On 'measuring' interdisciplinarity: from indicators to indicating

Indicators of interdisciplinarity are increasingly requested. Yet efforts to construct aggregate indicators have failed because understandings of interdisciplinarity are so diverse and ambiguous. Instead of universal indicators, we propose a contextualised process of indicating interdisciplinarity.

Interdisciplinary research for addressing societal problems

In this blog post I share some thoughts developed for and during a fantastic workshop held last October by the US National Academies to help the National Science Foundation (NSF) set an agenda on how to better measure and assess the implications of interdisciplinarity (or convergence) for research and innovation. The event showed that interdisciplinarity is becoming more prominent as science faces increasing demands to address societal challenges. As a result, policy makers across the globe are asking for methods and indicators to monitor and assess interdisciplinary research: where it is located, how it evolves and how it supports innovation.

Yet the wide diversity of (sometimes divergent) presentations at the workshop supported the view that policy notions of interdisciplinarity are too diverse and too complex to be encapsulated in a few universal indicators. I therefore argue here that strategies to assess interdisciplinarity should be radically reframed, away from traditional statistics and towards contextualised approaches. Following recent publications, I propose two different but complementary shifts:

From indicators to indicating:

- An assessment of specific interdisciplinary projects or programs, indicating where and how interdisciplinarity develops as a process, based on the particular understandings of interdisciplinarity that are relevant to the policy goals at stake.

From interdisciplinarity to knowledge portfolios:

- An exploration of research landscapes relevant to a societal challenge using a portfolio analysis approach, i.e. mapping the distribution of knowledge and the variety of pathways that may contribute to solving a societal issue, of which interdisciplinary research is only one.

Both strategies reflect the notion of directionality in research and innovation, which is gaining ground in policy. That is, to value the intellectual and social contributions of research, analyses need to go beyond quantity (scalars: unidimensional indicators) and take into account the orientation of research content (vectors: indicating and distributions).
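To make the scalar/vector distinction concrete, here is a minimal Python sketch with two hypothetical research portfolios (the fields and shares are invented for illustration, not data from the workshop). A scalar diversity indicator, here Shannon entropy, gives both portfolios the same value, while treating them as vectors shows that they point in quite different directions. The choice of entropy and cosine similarity is an assumption made for illustration, not a method proposed in this post.

```python
import math

# Hypothetical portfolios: share of publications per field.
# Field order (assumed for illustration): biology, engineering, sociology.
portfolio_a = [0.6, 0.3, 0.1]
portfolio_b = [0.1, 0.3, 0.6]


def shannon_diversity(shares):
    """Scalar indicator: Shannon entropy (in bits) of the field distribution."""
    return -sum(p * math.log2(p) for p in shares if p > 0)


def cosine_similarity(u, v):
    """Compare the orientation of two distributions treated as vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    # Same scalar: portfolio_b is just a permutation of portfolio_a ...
    print(round(shannon_diversity(portfolio_a), 3), round(shannon_diversity(portfolio_b), 3))
    # ... but the two portfolios are oriented towards different fields.
    print(round(cosine_similarity(portfolio_a, portfolio_b), 3))
```

Both portfolios score about 1.295 bits of diversity, yet their cosine similarity is only about 0.46: a single number hides the direction in which each portfolio points.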

The failure of universal indicators of interdisciplinarity

In the last decade, there have been multiple attempts to develop universal indicators based on bibliometric data. For example, in the US, the 2010 Science and Engineering Indicators (p. 5-35) reported on a study that the NSF commissioned from SRI International, which concluded that it was premature ‘to identify one or a small set of indicators or measures of interdisciplinary research… in part, because of a lack of understanding of how current attempts to measure conform to the actual process and practice of interdisciplinary research’.

In 2015, the UK research councils commissioned two independent reports to assess and compare the overall degree of interdisciplinarity across countries. The Elsevier report produced the unexpected result that China and Brazil were more interdisciplinary than the UK or the US, which I interpret as an artefact of the unconventional (rather than interdisciplinary) citation patterns of ‘emergent’ countries. A Digital Science report, taking a more reflective, multi-perspective approach, was tellingly titled ‘Interdisciplinary Research: Do We Know What We Are Measuring?’ and concluded that:

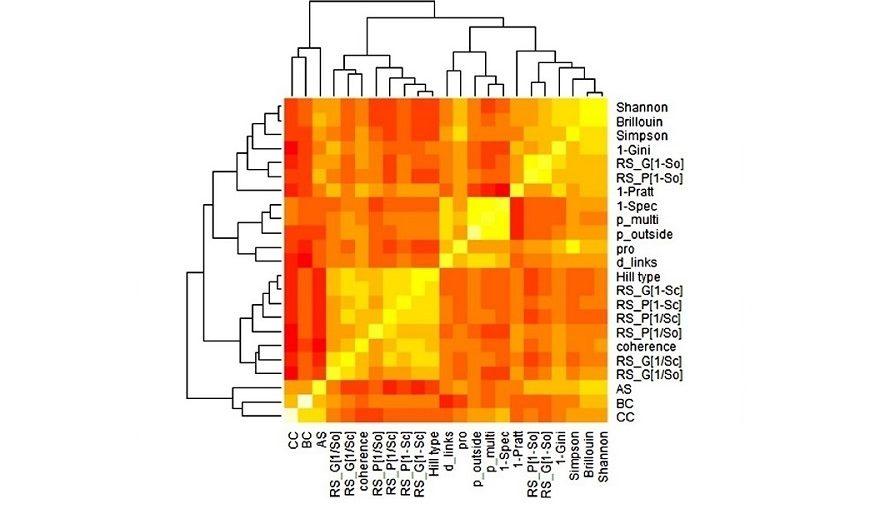

Wang and Schneider, in a quantitative literature review, ‘corroborate[d] recent claims that the current measurements of interdisciplinarity in science studies are both confusing and unsatisfying’ and thus ‘question[ed] the validity of current measures and argue[d] that we do not need more of the same, but rather something different in order to be able to measure the multidimensional and complex construct of interdisciplinarity’.

A broader review on evaluations of interdisciplinarity by Laursen and colleagues also found a striking variety of approaches (and indicators) depending on the contexts, purpose and criteria of the assessment. They highlighted a lack of ‘rigorous evaluative reasoning’, i.e. insufficient clarity on how criteria behind indicators relate to the intended goals of interdisciplinarity.

These critiques do not mean that one should disregard and mistrust the many studies of interdisciplinarity that use indicators in sensible and useful ways. Rather, the critiques point out that the methods are not stable or robust enough for general use, or that each illuminates only a particular aspect. Such indicators are therefore valuable, but only for specific contexts or purposes.

In summary, the failed policy reports and the findings of scholarly reviews suggest that universal indicators of interdisciplinarity cannot be meaningfully developed and that we should instead switch to radically different analytical approaches. These results are rather humbling for people like myself who have worked on methods for ‘measuring’ interdisciplinarity for many years. Yet they are consistent with critiques of conventional scientometrics and with efforts to develop methods for ‘opening up’ evaluation, as discussed, for example, in ‘Indicators in the wild’.

From indicators to indicating of interdisciplinarity

Does it make sense, then, to try to assess the degree of interdisciplinarity? Yes, it may, as long as evaluators or policy makers are specific about the purpose, the context and the particular understandings of interdisciplinarity that are meaningful in a given project. This means stepping out of the traditional statistical comfort zone and engaging with relevant stakeholders (scientists and knowledge users) about which types of knowledge combination make valuable contributions, while acknowledging that actors may differ in their understandings.

Making a virtue out of necessity, Marres and De Rijcke highlight that the ambiguity and situated nature of interdisciplinarity offer ‘interesting opportunities to redefine, reconstruct, or reinvent the use of indicators’, and they propose a participatory, abductive, interactive approach to indicator development. By opening up the process of measurement in this way, they bring about a leap in framing: from indicators (as closed outputs) to indicating (as an open process).

Marres and De Rijcke’s proposal may not come as a surprise to project evaluators, who are used to choosing indicators only after situating the evaluation and selecting relevant frames and criteria; in other words, evaluators already practise indicating. But this approach implies that aggregated or averaged measures are unlikely to be meaningful.

In a follow-up post, however, I will argue that, to reflect on knowledge integration for addressing societal challenges, we should shift from a narrow focus on interdisciplinarity towards broader explorations of research portfolios.

Header image: "Kaleidoscope" by H. Pellikka is licensed under CC BY-SA 3.0.

3 Comments

Following Ervin Laszlo, disciplines are artefacts: they limit the type and number of elements and interactions that are taken into account. However, there are no boundaries in nature that match the boundaries of disciplines. Therefore, although disciplines are in some cases a necessary self-limitation, their boundaries should be considered permeable, expandable and transferable. In any case, we should reject the idea that one must choose one discipline to the exclusion of others; rather, it is important to shuttle between disciplines in order to integrate, contextualize and cross-fertilize them.

For a quantitative operational definition, I would suggest defining the interdisciplinary degree in terms of the number of disciplines involved and the number of specific, logical, mutual interactions between them.
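The proposal above leaves open how the two counts should be combined. A minimal Python sketch of one hypothetical reading: given projects described as sets of disciplines (an assumed input format), `interdisciplinary_degree` (a hypothetical helper) counts the distinct disciplines and the distinct pairs of disciplines that co-occur, and reports both numbers rather than merging them into a single score. Treating co-occurrence as an "interaction" is a simplification; it does not capture whether an interaction is "specific" or "logical".

```python
from itertools import combinations


def interdisciplinary_degree(projects):
    """Return (number of disciplines, number of distinct pairwise interactions).

    `projects` is a list of sets of disciplines working together in one
    project (hypothetical input format). Each unordered pair of different
    disciplines that co-occur in a project counts once as a mutual interaction.
    """
    disciplines = set()
    interactions = set()
    for members in projects:
        disciplines.update(members)
        # Sort so that ("a", "b") and ("b", "a") are the same interaction.
        for pair in combinations(sorted(members), 2):
            interactions.add(pair)
    return len(disciplines), len(interactions)


if __name__ == "__main__":
    example = [
        {"physics", "chemistry"},
        {"chemistry", "biology", "medicine"},
        {"physics"},
    ]
    print(interdisciplinary_degree(example))
```

In the example, four disciplines take part and four distinct pairs interact, so the function returns `(4, 4)`. Keeping the two counts separate avoids choosing an arbitrary weighting between breadth and connectedness.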

Dear Loet,

many thanks for your comments.

I agree with you that interdisciplinarity is not a goal in itself, but a way of creating new types of knowledge, sometimes (perhaps often) aiming at synergy. Your operationalisation is very interesting and relevant indeed.

In the case of "interdisciplinarity as convergence" (which was the NSF's framing in the workshop that triggered this blog post), though, the goal is not even synergy itself but addressing societal challenges, for which synergy is useful but not necessarily the only way forward. This is what we discuss in the follow-up post.

https://leidenmadtrics.nl/articles/knowledge-integration-for-societal-challenges-from-interdisciplinarity-to-research-portfolio-analysis

I do hope that we can continue this discussion!!

Ismael Rafols

The measurement of interdisciplinarity and synergy in the Triple Helix model

“Interdisciplinarity” is not an objective in itself, but a means for creating “synergy.” Problem-solving often requires crossing boundaries, such as those between disciplines. When policy-makers call for “interdisciplinarity,” however, they mean “synergy.” Synergy is generated when the whole offers more possibilities than the sum of its parts. The measurement of “synergy,” however, requires a methodology very different from “interdisciplinarity.” The same information can be appreciated differently by different disciplines. Whereas information can be communicated, meanings can be shared.

Sharing of and translations among meanings can generate an intersubjective overlay with a dynamic different from information processing. The redundancy in the loops and overlaps can be measured as feedbacks which reduce uncertainty (that is, negative bits). Meanings refer intersubjectively to “horizons of meaning” that are instantiated in events. Whereas the events are historical, appreciations are analytical. Knowledge-based distinctions add to the redundancy by specifying empty boxes.
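In the Triple Helix literature, this redundancy is commonly operationalised as mutual information in three dimensions (for example university, industry and government), T = H_u + H_i + H_g - H_ui - H_ug - H_ig + H_uig, where a negative value is read as a reduction of uncertainty. The minimal Python sketch below computes that quantity from categorical records; the record format and function names are hypothetical, and it illustrates the general formula rather than the exact procedure of the referenced paper.

```python
import math
from collections import Counter
from itertools import combinations


def entropy(counts):
    """Shannon entropy in bits of a Counter of observed outcomes."""
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values() if c > 0)


def marginal(records, dims):
    """Count each record projected onto the given dimensions (indices)."""
    return Counter(tuple(r[d] for d in dims) for r in records)


def triadic_mutual_information(records):
    """T_123 = H1 + H2 + H3 - H12 - H13 - H23 + H123, in bits.

    Each record is a 3-tuple of categorical values, e.g. whether a paper
    has a university, industry and government address (hypothetical).
    Negative values indicate redundancy (reduced uncertainty).
    """
    singles = sum(entropy(marginal(records, (d,))) for d in range(3))
    pairs = sum(entropy(marginal(records, p)) for p in combinations(range(3), 2))
    joint = entropy(marginal(records, (0, 1, 2)))
    return singles - pairs + joint


if __name__ == "__main__":
    # Third dimension is the XOR of two independent ones.
    xor_records = [(x, y, x ^ y) for x in (0, 1) for y in (0, 1)]
    # All three dimensions always agree.
    copy_records = [(0, 0, 0), (1, 1, 1)]
    print(triadic_mutual_information(xor_records))
    print(triadic_mutual_information(copy_records))
```

For the XOR records the function returns -1 bit (a negative value, i.e. redundancy in this reading), while for three identical dimensions it returns +1 bit; three fully independent dimensions give 0.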

For example, in biomedical “translational research” (from bench to bedside) or in university-industry-government relations, synergy is more important than interdisciplinarity. The external stakeholders structure the configuration; this structuring can be appreciated in the case of synergy. Synergy measures options that have not yet occurred and thus shifts the orientation from the measurement of past performance to future perspectives indicated in terms of redundancies.

Loet Leydesdorff

University of Amsterdam, 10 December 2020

Reference

Leydesdorff, L., & Ivanova, I. A. (in print). The Measurement of “Interdisciplinarity” and “Synergy” in Scientific and Extra-Scientific Collaborations. Journal of the Association for Information Science and Technology, https://doi.org/10.1002/asi.24416
