The Role of University Rankings in Research Evaluation Revisited

How are rankings used in research evaluation and excellence initiatives? The author presents a literature review using English and Russian sources, as well as gray literature. The Russian case is highlighted, where rankings have had an essential role in research evaluation and policy until recently.

Since their inception in 2003, global university rankings have gained increasing prominence within the higher education landscape. Originally designed as marketing and benchmarking tools, the rankings have transformed into influential instruments for research evaluation and policymaking. Acknowledging the vast impact they have had on the field, my recent study aims to provide an extensive overview of the literature pertaining to the use of university rankings in research evaluation and excellence initiatives.

The paper presents a systematic review of existing literature on rankings in the context of research evaluation and excellence initiatives. English-language sources primarily constitute the basis of this review, although additional coverage is given to literature from Russia, where the significance of rankings in the national policy was emphasized in the title and objective of the policy project 5top100 (the review covers only a relatively small part of Russian-language literature on rankings; an extended study of the Russian academic literature is available here). Furthermore, gray literature has also been included for a comprehensive understanding of the topic.

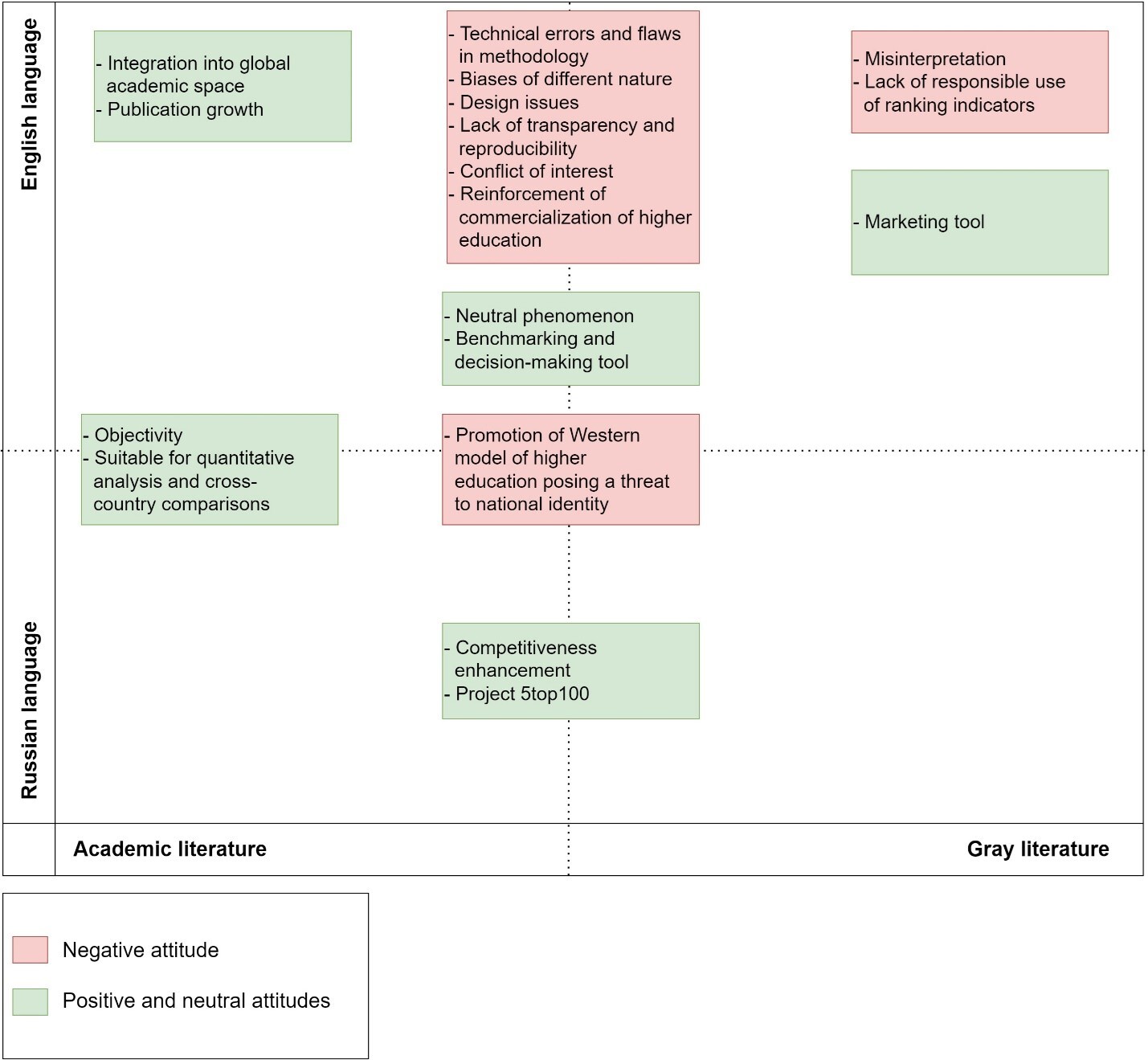

The attitudes towards university rankings and their use in research evaluation in academic and gray literature

The literature analysis reveals a prevailing academic consensus that rankings should not be used as the sole measure in research assessment or as indicators for national policy. Rankings can serve marketing and benchmarking functions, but only when used responsibly, with their limitations recognized. Various authors have highlighted technical and methodological flaws, biases of different kinds (including biased evaluation of both universities and individual researchers), conflicts of interest, and risks to national identity. Criticism focuses mainly on rankings that use a composite indicator as a single overall measure of university performance (QS, THE, and ARWU). Relatively few studies demonstrate a positive or neutral attitude towards global university rankings. The key findings from the literature are summarized in the diagram below.

Notably, the perception of global university rankings in gray literature has changed substantially. During the 2000s and early 2010s, the outlook on university rankings was largely favorable, with policymakers and some academics endorsing their use, at least to some extent, to gauge the excellence of higher education institutions on a global scale. In recent years, the gray literature has shifted discernibly towards a more critical view of university rankings.

Rankings and research evaluation in the Russian science system

Rankings played an incredibly significant role in Russian national policy. In 2013-2020, the Russian government implemented the 5top100 project aimed at getting five Russian universities into the top 100 of global university rankings. The goal of the project was not achieved, but some experts noted positive changes, primarily an increase in the number of publications by Russian universities. The 5top100 project was continued within the framework of the Priority-2030 program. This ongoing policy initiative is also aimed at promoting the development and advancement of national universities. One distinct aspect of this program is its rejection of global university rankings as the sole basis of evaluation. By moving away from a narrow reliance on global rankings, the program aims to foster a more holistic and context-specific approach to assessing the performance and progress of Russian universities. However, a considerable proportion of evaluation in Priority-2030 still depends on quantitative indicators also used in the methodologies of global university rankings, in particular the scoring of publications based on the indexing of journals in Scopus or Web of Science.

Despite the rosy reports of Russian universities moving up in the rankings and increasing their publications, it suddenly turned out that the country did not have enough technological competencies to produce car paint or starter cultures for dairy products. The situation appears even more concerning in light of an analysis of ranking positions for 28 Russian universities that sought consulting services from Quacquarelli Symonds (QS) between 2016 and 2021 (see the analysis by Igor Chirikov). The findings revealed an unusual increase in ranking positions that could not be explained by any observable changes in the universities' characteristics, as indicated by national statistics. This phenomenon, termed "self-bias" by the author, raises questions about the validity of these rankings. It is worth noting that in the latest edition of the QS ranking, all Russian universities experienced a significant decline in their positions. This raises the question: were Russian universities performing well previously, and has there been a noticeable deterioration in their activities lately? Overall, I believe that university rankings alone cannot provide a reliable answer to these questions.

Returning to the literature review, I must admit that the scope of topics covered in Russian-language literature on rankings is remarkably limited, with competitiveness as the dominant theme. Furthermore, there is a striking lack of overlap between academic and gray literature in Russian compared to English-language sources. In fact, the only point of intersection is the neo-colonial discourse, which considers rankings a tool for promoting the Western model of higher education. Some authors, for instance Lazar (2019), also point out the difference between the Western and Russian models of higher education. In Western countries, science and higher education have traditionally coexisted within universities, with applied research being actively developed through the participation of businesses. In contrast, in Russia, since the times of the USSR, there has been a "research triad" consisting of the Academy of Sciences, industry design bureaus, and research institutes, while universities and industry institutes have primarily focused on personnel training with government funding. As a result, both fundamental and applied research have historically been conducted largely outside of universities in Russia.

The topic of responsible research evaluation is poorly developed in Russia. Regrettably, this indicates the isolated nature of Russian science and educational policy debates, which obviously hampers the prospects for development.

What to do in this situation?

I believe the problem described above is not unique to Russia. Although the degree of influence varies, rankings continue to shape national and institutional strategies in higher education practically all over the world.

I have emphasized the inherent complexity of dismissing rankings outright since they continue to serve as effective marketing tools. Having said that, I must also acknowledge the complexity of cultural change. Even now some people believe that university rankings measure the international prestige of the country “based on the objective assessment by experts from different countries of the significant achievements of universities.”

A recent report by the Universities of the Netherlands proposed a strategy to deal with university rankings in more responsible ways. This entails the implementation of initiatives at three levels. In the short term, universities should embark on individual initiatives. In the medium term, coordinated initiatives should be undertaken at the national level, such as collaborative efforts by all universities in the Netherlands. In the longer term, coordinated initiatives are to be set up at the international level.

At present, I would suggest focusing on several specific stops:

- Stop evaluating academics based on university ranking indicators. Start rewarding the contributions of faculty and researchers in all areas of university activities.

- Stop constructing university strategies based on university rankings. Do not use ranking tiers in analytical reports for management decision making; instead, focus on the actual contributions made by a university (scientific, educational, and societal).

- Stop evaluating universities based on ranking indicators. Every university has a unique mission, and only fulfillment of this mission really matters.

- Stop using ranking information in national strategies and other kinds of ambitions. Only universities’ contributions to national and global goals should be considered.

- Stop paying money for consulting services to ranking compilers. This is a pure conflict of interest.

However, the change starts with each of us. Every time we say or post on social media something like “My university has advanced N positions in ranking X”, this adds to the validity of rankings in the eyes of potential customers. By refraining from making such statements, every member of the academic community can contribute to reducing the harmful impact of rankings.

Competing interests

The author is affiliated with the Centre for Science and Technology Studies (CWTS) at Leiden University, which is the producer of the CWTS Leiden Ranking and is engaged in U-Multirank. The author is also a former employee of the Ministry of Science and Higher Education of the Russian Federation that implemented the Project 5top100 excellence initiative. No confidential information received by the author during the period of public service in the Ministry was used in this paper.

Header image: Nick Youngson
