How important are bibliometrics in academic recruitment processes?

In a newly published paper in Minerva I have analyzed confidential reports from professor recruitments in four disciplines at the University of Oslo. In the paper I show how bibliometrics are used as a screening tool and not as a replacement for more traditional qualitative evaluation of candidates.

The use of metrics is a hot topic in science, and scholars have criticized metric-based evaluation for being driven more by data than by judgement. Both the Leiden Manifesto and the DORA declaration, signed by thousands of organizations and individuals, have expressed concerns about the use of metrics to assess individuals. Despite this critique, metrics are used in academic hiring processes, which represent critical junctures for academics deciding on their future careers. Yet there are few studies of how metrics are used, how much weight they carry, and whether they have replaced or only supplemented the more traditional qualitative evaluation of candidates. In a newly published paper in Minerva I address this research gap and show that metrics are applied chiefly as a screening tool to reduce the number of eligible candidates, not as a replacement for peer review.

The lack of knowledge about how metrics are used in recruitments could partly be due to the secrecy of these processes, which are often highly confidential. However, with access to confidential documents from 57 professor recruitments in sociology, physics, informatics and economics between 2000 and 2017 at the University of Oslo, I was able to explore these black boxes. Going beyond more superficial accounts of whether metrics were used or not, I could unpack the evaluation of candidates and explore how metrics were used. Using content analysis in NVivo, I identified which criteria were used, when they were used and how important they were for the ranking of the candidates.

These documents showed that research experience was the most important criterion in recruitment processes, while the candidates’ teaching and dissemination experience was less valued. In these evaluations, metrics of research output were an important criterion but seldom the most important one. Contrary to the literature suggesting an escalation of metrics in academic recruitment, I found largely stable assessment practices. Furthermore, paying attention to a candidate’s volume of publications is not an entirely new phenomenon, but has been a practice throughout the period covered by my study. Still, I found a moderately increased reliance on metrics in these evaluations.

In the evaluation of candidates, bibliometric indicators were primarily applied as a screening tool to reduce large pools of applicants and not as a replacement for the qualitative peer review of the candidates’ work. It is quite understandable that when universities receive applications from, for example, more than 40 candidates, they cannot evaluate them all in depth and need to narrow the field before a more thorough evaluation. Here, bibliometrics have proved to be a useful screening tool.

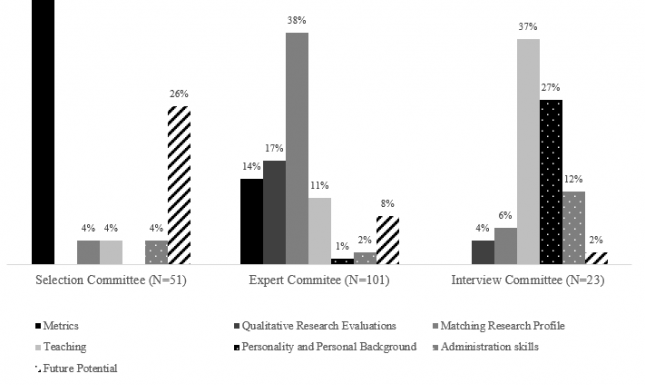

In the figure below I display the most important criteria used in the three different committees that made up the Norwegian academic recruitment processes. While metrics were the most important criteria in the selection committees, whose task it is to select eligible candidates, they were less important when it came to the in-depth reading of the candidates’ work in the expert committees. Here, tenured professors evaluated the candidates according to disciplinary standards. Moreover, in the last stage of the recruitment process, when the highest-ranked candidates were interviewed, metrics played little role, as the focus of these interviews was to evaluate the candidates’ teaching experience and their social skills.

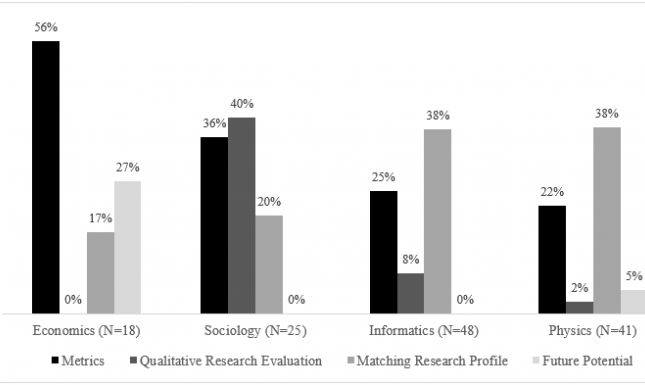

The use of metrics was also strongly dependent on the evaluation cultures of different disciplines. In sociology, the evaluators’ in-depth reading of the candidates’ work was still the most important criterion, and in physics and informatics having the specific skills announced in the call was more important than having an impressive publication list. Economics was an exception, however: there, the number of top publications proved to be the most salient criterion. The next figure shows the most important criteria in the expert committee, where tenured professors evaluated the candidates.

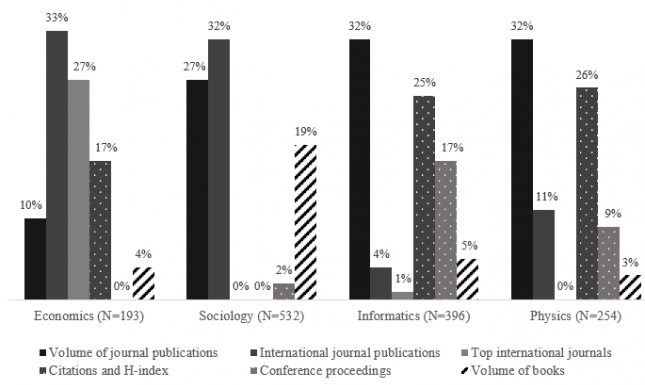

The disciplines further relied on different types of metrics. The social sciences chiefly emphasized publication volumes and journal quality, while the natural sciences relied more heavily on a variety of metrics, such as the number of publications, citation scores and the number of conference proceedings. Figure 3 shows how often the different types of metrics were applied by the expert committees.

My study thus reveals a more nuanced view of the use of metrics in the evaluation of individual researchers. Even though metrics are used in academic recruitments, this does not imply a fundamental change in these processes in which metrics have overruled traditional peer review. Instead, the Norwegian case points to a moderate use of metrics, for instance as a screening tool.

Nevertheless, although the use of metrics was moderate, I have not investigated whether the first screening process eliminated only irrelevant candidates or whether it also excluded highly qualified candidates. Nor have I investigated more indirect effects, for example whether awareness of this screening makes researchers pursue ideas for which publication in highly ranked journals seems more likely. I thus encourage scholars to study the effects of the use of metrics more closely.
