Bona Fide Journals - Creating a predatory-free academic publishing environment

Predatory journals pose a significant problem to academic publishing. In the past, a number of attempts have been made to identify them. This blog post presents a novel approach towards a predatory-free academic publishing landscape: Bona Fide Journals.

A recent item in Nature News reports “Hundreds of ‘predatory’ journals indexed on leading scholarly database”, sub-headed “[…] the analysis highlights how poor-quality science is infiltrating literature.”

A year before, a group of leading scholars and publishers already warned in a comment in Nature, "So far, disparate attempts to address predatory publishing have been unable to control this ever-multiplying problem. The need will be greater as authors adjust to Plan S and other similar mandates, which will require researchers to publish their work in open-access journals or platforms if they are funded by most European agencies, the World Health Organization, the Bill & Melinda Gates Foundation and others."

Given the significance of the problem of predatory publishing, QOAM (Quality Open Access Market), in cooperation with CWTS, has started a new initiative to create a predatory-free academic publishing environment: Bona Fide Journals.

The harm

The fine of $50 million imposed on OMICS by a U.S. federal judge reflects the material damage this publisher caused over the years 2011 to 2017. Spread over those seven years, that is roughly $7 million per year. Given the long list of predatory publishers, multiplying this figure by 3 seems only a modest guess at the harm caused by all of them together: roughly $20 million annually. This figure might be growing under the current publication pressure, while, at the same time, predatory journals simply pass the compliance test of the newly launched Plan S Journal Checker Tool.
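The back-of-the-envelope estimate above can be retraced in a few lines. This is only a sketch of the arithmetic: the function name is hypothetical, the years 2011 to 2017 are counted inclusively as seven years, and the multiplier of 3 is the guess made in the text, not a measured quantity.

```python
def annual_harm_estimate(fine_usd: float, first_year: int, last_year: int,
                         multiplier: float) -> tuple[float, float]:
    """Return (damage per year for one publisher, estimate for all publishers)."""
    years = last_year - first_year + 1      # 2011..2017 inclusive = 7 years
    per_year = fine_usd / years             # damage attributed to OMICS per year
    return per_year, per_year * multiplier  # scaled up by the guessed multiplier


per_year, total = annual_harm_estimate(50_000_000, 2011, 2017, multiplier=3)
print(f"OMICS alone: ${per_year / 1e6:.1f} million per year")   # about 7.1
print(f"All publishers: ${total / 1e6:.1f} million per year")   # about 21.4
```

The result, a little over $21 million, is what the text rounds to roughly $20 million annually.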

On top of that comes the immaterial, hard-to-quantify damage to the reputation of misled authors and falsely advertised editors and reviewers. This affects the authority of the whole fabric of science. “Publications using such practices may call into question the credibility of the research they report,” according to the US National Institutes of Health (NIH). Predatory journals contaminate the scientific and scholarly domain with fake news in a period in which the integrity of science may be more important than ever.

Also, niche journals outside the mainstream of the highly branded portfolios of the big publishing houses are at risk. Jan Erik Frantsvåg conducted a thorough analysis of journals removed from the Directory of Open Access Journals (DOAJ) in their 2016 grand cleansing operation. His resounding conclusion is that “there is nothing […] that indicates that the journals that were removed were of inferior scholarly quality compared to those remaining.” In such a banished mix the good suffer along with the bad, and a differentiating service is urgently needed. To this day, the problem continues. See here and here and here.

The remedy

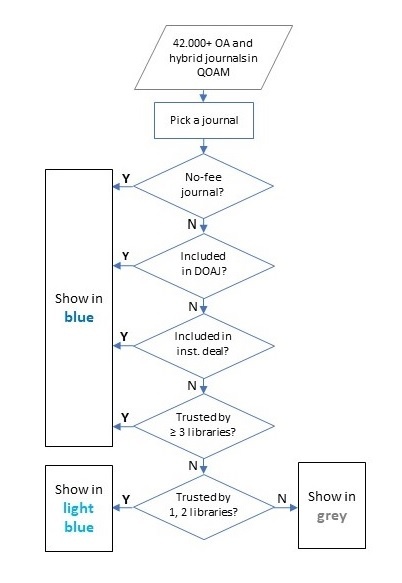

Bona Fide Journals. Colours in the flowchart mark the level of trustworthiness: blue sufficient, light blue partial, grey none.

Setting up a list of predatory journals has proven problematic (see here and here). Conversely, creating a list of trustworthy journals casts out honest journals that did not make it onto the list. Other attempts concern lists of criteria for ‘predatory-ness’, leaving it to individual researchers to check a specific journal (see here (paywalled) and here).

Recently, QOAM, in cooperation with CWTS, launched a new initiative: Bona Fide Journals. Starting with all 42,000 journals in QOAM, the idea is to mark the journals in the list that are deemed non-predatory, either because they are no-fee journals or because they are approved by library professionals. Examples of the second category are journals in DOAJ and journals included in institutional deals.

However, this still leaves thousands of journals unaddressed. Bona Fide Journals will enable institutional libraries to express their trust in these journals. The expectation is that most libraries are familiar with a number of niche journals from institutions, societies or charities in their own discipline, region, or language.

The only thing libraries have to ascertain is that a journal is not mala fide. In case of doubt, the Compass to Publish may offer practical guidance. If a journal has gained expressions of trust from three different libraries, it will be included in the list of trustworthy journals. Thus, libraries may resume their role as quality guardians of the academic publishing domain.

Next to DOAJ, other sources may be included in the second diamond of the flowchart (see Figure 1), such as high-profile indexing services or recognized platforms like Redalyc, SciELO, OpenEdition, or African Journals Online.
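The decision logic described in this section can be sketched in a few lines of Python. This is an illustration only, not the actual Bona Fide Journals implementation: the class and field names are hypothetical, and reading "light blue" as "one or two library endorsements" is an assumption based on the figure caption.

```python
from dataclasses import dataclass
from enum import Enum


class Trust(Enum):
    SUFFICIENT = "blue"
    PARTIAL = "light blue"
    NONE = "grey"


# From the text: trust must be expressed by three different libraries.
REQUIRED_LIBRARIES = 3


@dataclass(frozen=True)
class Journal:
    title: str
    no_fee: bool = False                  # journal charges no publication fee
    in_doaj: bool = False                 # listed in DOAJ
    in_institutional_deal: bool = False   # covered by an institutional deal
    trusting_libraries: frozenset[str] = frozenset()  # distinct endorsing libraries


def classify(journal: Journal) -> Trust:
    """Walk through the checks in the order the text presents them."""
    if journal.no_fee:
        return Trust.SUFFICIENT
    if journal.in_doaj or journal.in_institutional_deal:
        return Trust.SUFFICIENT
    endorsements = len(journal.trusting_libraries)
    if endorsements >= REQUIRED_LIBRARIES:
        return Trust.SUFFICIENT
    if endorsements > 0:
        return Trust.PARTIAL  # assumption: some, but fewer than three, endorsements
    return Trust.NONE


niche = Journal("Hypothetical Niche Journal",
                trusting_libraries=frozenset({"Library A", "Library B"}))
print(classify(niche).value)
```

Storing the endorsing libraries as a set means that repeated expressions of trust from the same library count only once, in line with the requirement of three different libraries. Additional sources such as Redalyc or SciELO would simply become further conditions in the second check.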

What is available now?

Today, Bona Fide Journals is operational as a minimum viable product, soliciting user comments to guide further development. Potential current use cases are:

- A (spammed) researcher checking the trustworthiness of a journal which solicits their article.

- A library suggesting a specific collection of trustworthy journals for a project or an institute.

- An open access publishing service using the list of Bona Fide Journals to filter out predatory journals that may have infiltrated their journal base.

What is next?

Bona Fide Journals offers basic evidence of the honesty of journals, but journals may still differ a lot when it comes to peer review, editing, data transparency, speed of publication, author evaluation, or publication fee. There are several websites that provide useful information on these aspects, but authors who wish to select a journal in which to publish their article may easily get lost when trying to find the most relevant information. Therefore, a next step that we hope to take is to develop an infrastructure that brings together the diversity of such services in a more systematic way. Instead of reducing the selection of a journal to a simplistic dichotomy (mainstream vs. unfamiliar) or a questionable one-dimensional ranking (journal impact factor), such an infrastructure offers researchers an informative variety of publishing options and enables the scientific community to optimally benefit from these options.

3 Comments

Dear Leo,

First, I have to express my admiration for Ivan's diplomatic skills, because the issues pointed out by him are usually expressed in much harsher terms - and deservedly so.

You write,

"So, this incidental order does not mean that CWTS is promoting this journal in any way."

This is plainly false, unless a specific warning is placed at every access point to the functionality. And even more false, given that the section "What is available now?" lists some "current" (not "future"!) use cases.

Hence, please consider, as a bare minimum:

(1) Placing a visible warning against any policy-making / advertising based on the current classification of journals as blue / light blue / grey.

(2) Rewriting the section "What is available now?" and de-listing all "current" use cases.

Overall, I agree with Ivan that "the proposed simple solution is not entirely up to the task", or even rather, "the proposed simple solution is entirely not up to the task", just like any solution operating with bare numbers and excluding any expert analysis.

Also, imagine an extreme case in which a journal published by some top player on the market gets infested with papermill products, which typically boosts the journal in terms of customer satisfaction. Will the journal go up your rating then? Do you really want that to happen?

Sincerely,

Alexander Magazinov

Hello,

I'm also studying dubious journals as a research administrator/researcher, see

https://arxiv.org/abs/2003.08283 forthcoming this year in Scientometrics - just a bit later than Macháček and Srholec, but based on 2016 work https://herb.hse.ru/data/2016/03/02/1125175286/3.pdf also presented at Nordic Workshop for Bibliometrics https://figshare.com/articles/presentation/Potentially_Predatory_Journals_in_Scopus_Descriptive_Statistics_and_Country-level_Dynamics_NWB_2016_presentation_slides_/4249394/1

This here is an interesting development, but it's strange to see MDPI's Sustainability topping the blue list. I'm afraid this raises some questions regarding the project as a whole.

For several years I was engaged in journal quality control of the leading Russian university's monetary bonus program based on publication counts (not recommended), and we had to delist this one after examination of papers that Russian authors were publishing there, and other issues:

Imo journal lacks focus sometimes to the extent of "anything goes, just add 'sustainable' somewhere in the title or abstract" (officially it is "a journal of environmental, cultural, economic, and social sustainability of human beings"). Other concerns include a _combination_ of:

- paid OA (don't get me wrong, paid OA is not 'bad' per se)

- soaring publication counts - more than tenfold since 2015, now a whopping 10k papers in 2020 alone - the fourth in the world after Scientific Reports, IEEE Access and PLOS One

- VERY quick peer review ("manuscripts are peer-reviewed and a first decision provided to authors approximately 14.7 days after submission"), compared to ~45 days for PLOS One and SR.

- assessment of MDPI by Nordic experts incl Gunnar Sivertsen ('borderline', see wikipedia entry)

- SciRev reviews https://scirev.org/reviews/sustainability/

To see CWTS kinda promoting this journal and facilitating MDPI's income is confusing. I'm afraid the proposed simple solution is not entirely up to the task. Yes, I've read the QoS description (not quality of content, but quality of service), but we all know how these rankings are perceived by inexperienced scholars outside prominent universities. And, frankly, why start by ranking by service and not quality (this also applies to the DOAJ seal, which is similarly confusing for the most vulnerable authors and easily achieved by publishers)? Don't you think that some people are effectively ranking "ease of quickly getting a WoS/JIF/Scopus paper" above all?

Also, it would be great if you provided the exact calculation of the QoS indicator for Sustainability.

Sorry if my comments seem pressing, but for a research administrator in a country badly stricken by pseudoscience stemming from the government's simplistic use of metrics, this whole issue is very important.

Best regards,

Ivan Sterligov

ivan.sterligov@gmail.com

PS to highlight another unexpected case, MDPI's Publications journal is blue, and Ludo Waltman's excellent QSS by MIT Press is grey.

Dear Ivan,

Thank you very much for your comments on MDPI’s journal Sustainability topping the blue list in Bona Fide Journals. In the current version of BFJ the journals are sorted according to their Quality-of-Service indicator in Quality Open Access Market – QOAM. In future versions other sorting options will be included. So, this incidental order does not mean that CWTS is promoting this journal in any way.

In fact, the QoS reflects authors’ appreciations of the service of a journal. The specific data for this journal may be found here. It concerns 100 scorecards with an average score of 4.3. This score is multiplied by its ‘robustness’, relating the number of scorecards N to the number of articles A of the journal, in this case A = 10,000. The formula for the robustness may be found here, in this case resulting in 2.4. So, robustness times average score gives 10.5.

At the journal page you will not only find the links to every single score card but also an overview of the additional comments. Although some are critical, most authors seem pretty satisfied, specifically with the publication speed.

This brings me back to the final paragraph of the blog, “What is next?”. As you may read there, Bona Fide Journals is only the basis of a broader ambition to combine a number of services that give information about various aspects of academic journals. Such a combination will create an information-rich publishing environment enabling authors to make a well-informed choice of the journal they wish to publish their article in, and will also give the academic community a multidimensional awareness of the notion ‘quality of a journal’. So, please keep following us (critically).

Finally, you are absolutely right with respect to QSS. The journal should be blue of course. Your observation laid bare a bug in our software which we hope to have fixed asap.

Leo Waaijers.
